SpeechAmbient

Ambient AI understanding clinical conversations - effortlessly.

Understanding clinical conversations

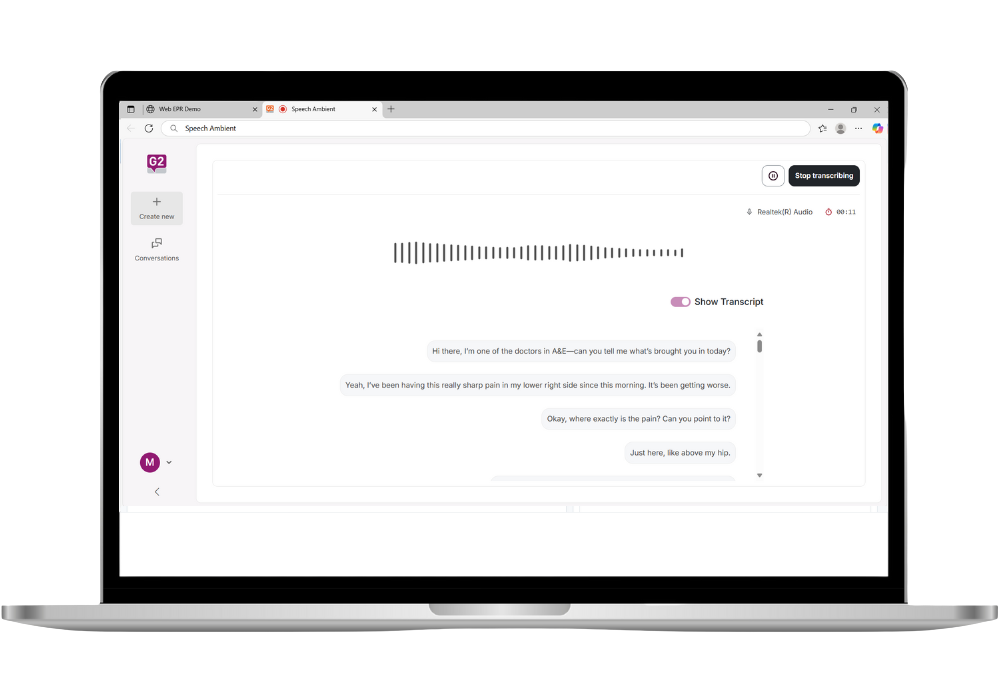

SpeechAmbient captures real clinical conversations - naturally and intelligently behind the scenes - and transforms them into accurate, structured documentation. No need to pause, dictate, or re-type: it recognises what is said and formulates notes while you consult with your patients.

Powered by advanced ambient speech intelligence, SpeechAmbient listens in the background, identifies speakers, and generates summaries that reflect real-world clinical dialogue - saving time, reducing admin, and freeing up focus. Ideal for patient-doctor consultations and multi-disciplinary meetings.

SpeechAmbient benefits from Atlas Aura, state-of-the-art, highly specialised medical speech technology.

Atlas Aura leverages AI with neural networks and deep learning - not just capturing content, but transforming clinical conversations into structured medical documentation.

- Medically specialised AI speech recognition: Built for clinical precision

- Live multi-speaker transcription: Captures patient-clinician conversations in real time

- Deferred audio upload: Securely upload recordings (handovers or remote consults) for later transcription

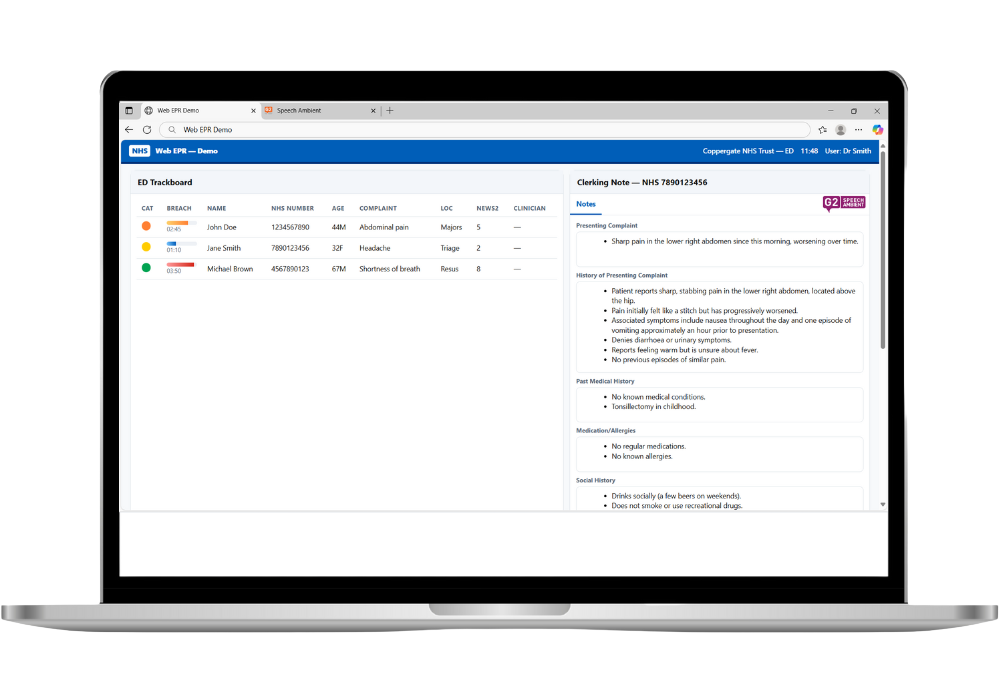

- Automatic note generation: Converts spoken conversations into structured summaries - ready for review and sign-off

- Custom templates: Tailor formats to your specialty, clinical workflow, or compliance standards

- Browser-based: Runs in any modern browser - no installs, no IT complexity

- EHR-ready: Download, copy, or send outputs directly to your EHR or documentation platform

A smarter way to capture consultations

SpeechAmbient helps you stay present with your patients, while it quietly handles the notes in the background.

Ambient AI - transforming natural conversations into accurate, structured clinical documentation - without the need for structured, active dictation.

Why clinicians love it:

- Exceptional speech recognition with clinical precision

- Hands-free documentation

- Enhanced patient interaction

- Time saving for clinicians

- Minimal administration effort

- Structured documentation output

SpeechAmbient records real clinical conversations in real time - no dictation, no disruption.

It automatically transforms dialogue into accurate, EHR-ready clinical notes tailored to your workflow.

Built on Atlas Aura, medically specialised speech technology, it delivers reliable, accurate multi-speaker transcription.

Watch SpeechAmbient in action

(Video placeholder - new demo coming soon)

Want to see it live?

Book a quick, no-pressure walkthrough

“With its robust medical dictionary, intelligent voice recognition, and AI-driven correction features, SpeechCursor allows me to dictate, edit, and authorise reports in a single streamlined step—saving time and significantly enhancing my workflow.”

“SpeechReport is a fast electronic speech recognition system that improves the quality of patient care and experience, by the production of documents following patient episodes. It relieves the necessity for clinicians to spend hours on admin and frees up time for delivery of care to patients.”

“It takes the pressure off having to jot notes during a session, allowing me to focus more fully on the patient and observing their movements.”

“SpeechAmbient makes it quicker to write notes at the end of a session and captures the key points accurately, reducing the need to rely on memory during busy clinics.”

“It's helpful to use a template developed with a facial therapist rather than a standard SOAP note format. The notes are concise and clinically relevant.”
